Bhoos Games organized the SmartBots Coding Challenge, a four-month-long competition inviting developers and coding enthusiasts to build an AI bot for a card game.

To keep participants engaged throughout the competition, we designed a centralized dashboard that 870 participants across 725 teams used to build, submit, and track their bots. Based on that reception, the dashboard returned for the competition’s second year with enhanced features.

To make the competition engaging for participants, the dashboard needed to address several key challenges:

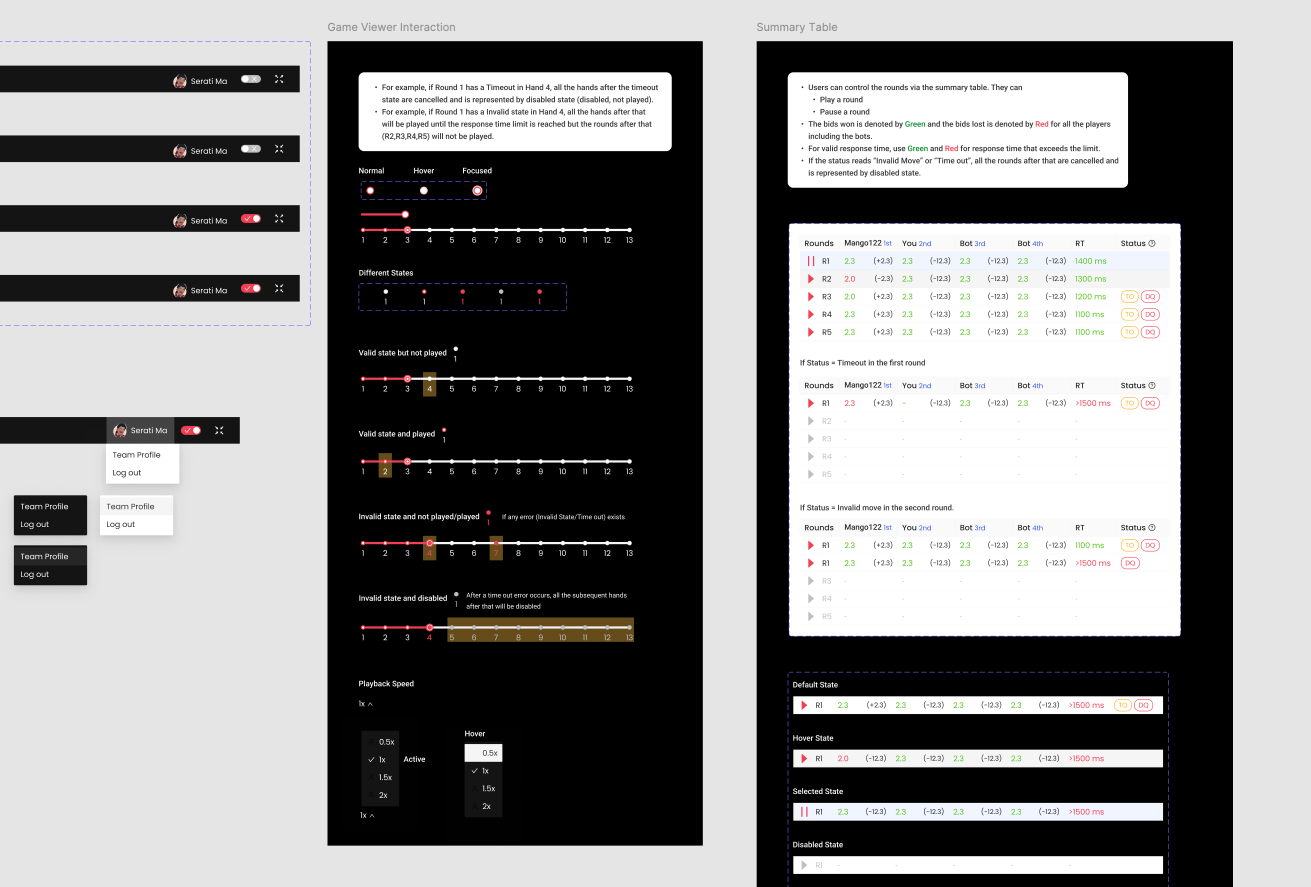

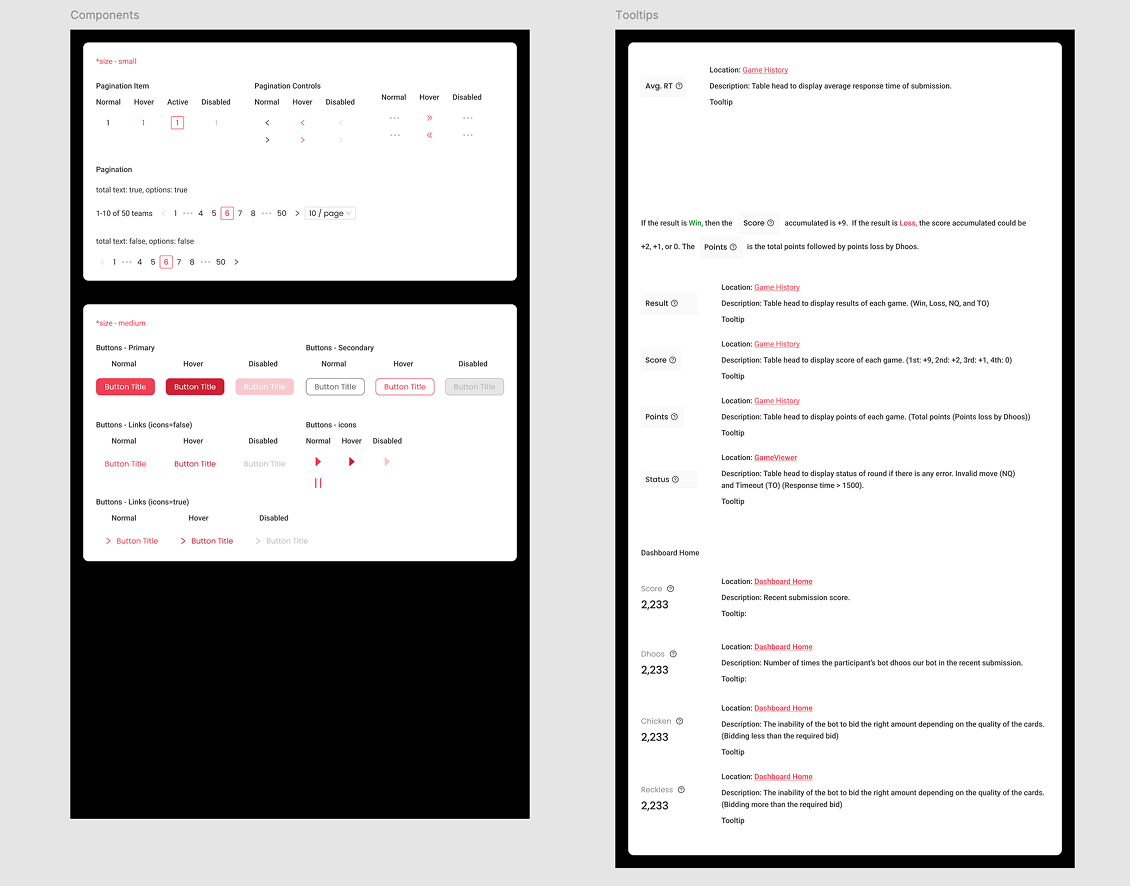

Designing the dashboard was a collaborative and iterative process, focused on understanding developer needs, defining objectives, and refining features based on internal feedback.

Objectives Defined:

The feature ideation process was iterative and heavily influenced by the technical team’s input. My role included:

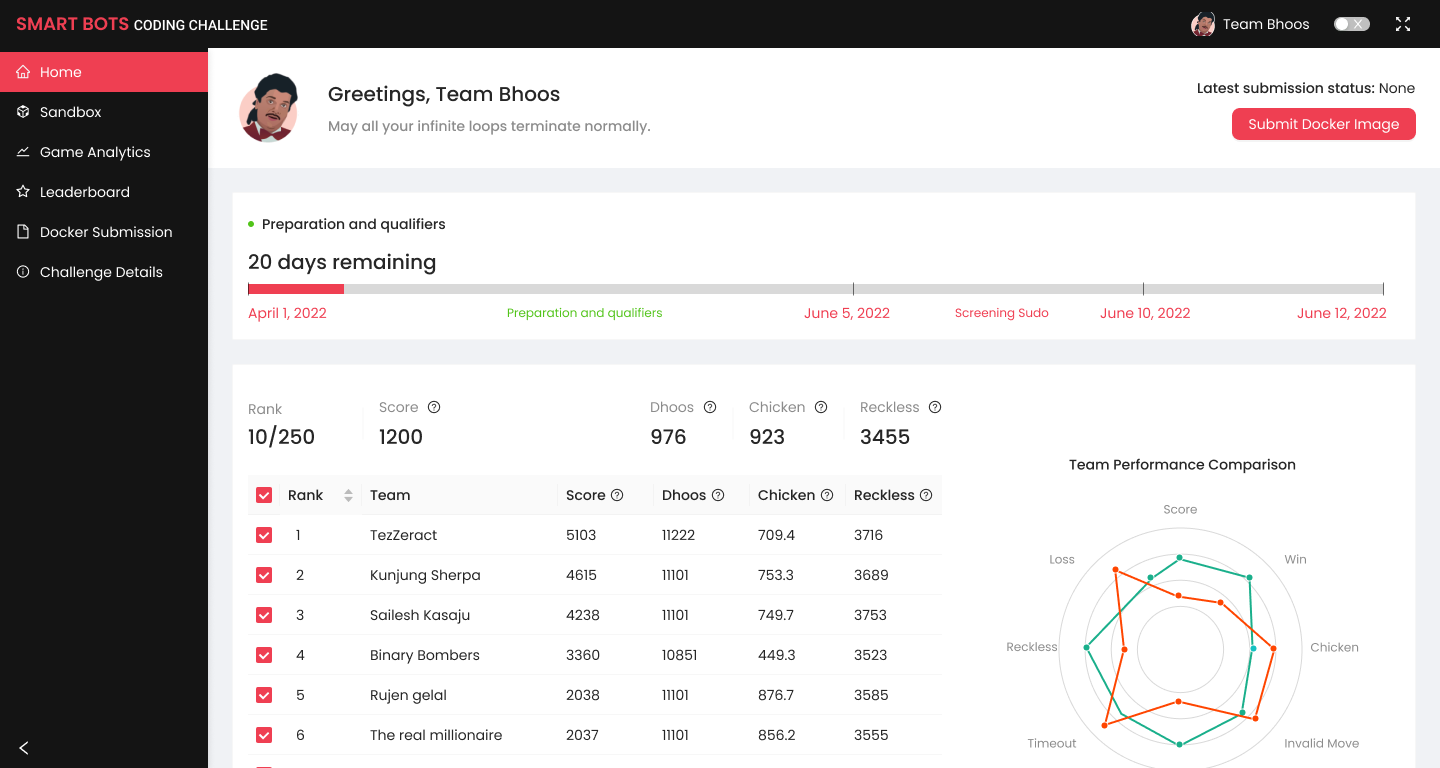

We designed a dashboard to keep participants engaged throughout the four-month-long competition and to help them improve their bots at every stage.

Dashboard Home lets participants track their own performance and compare it with that of other competitors.

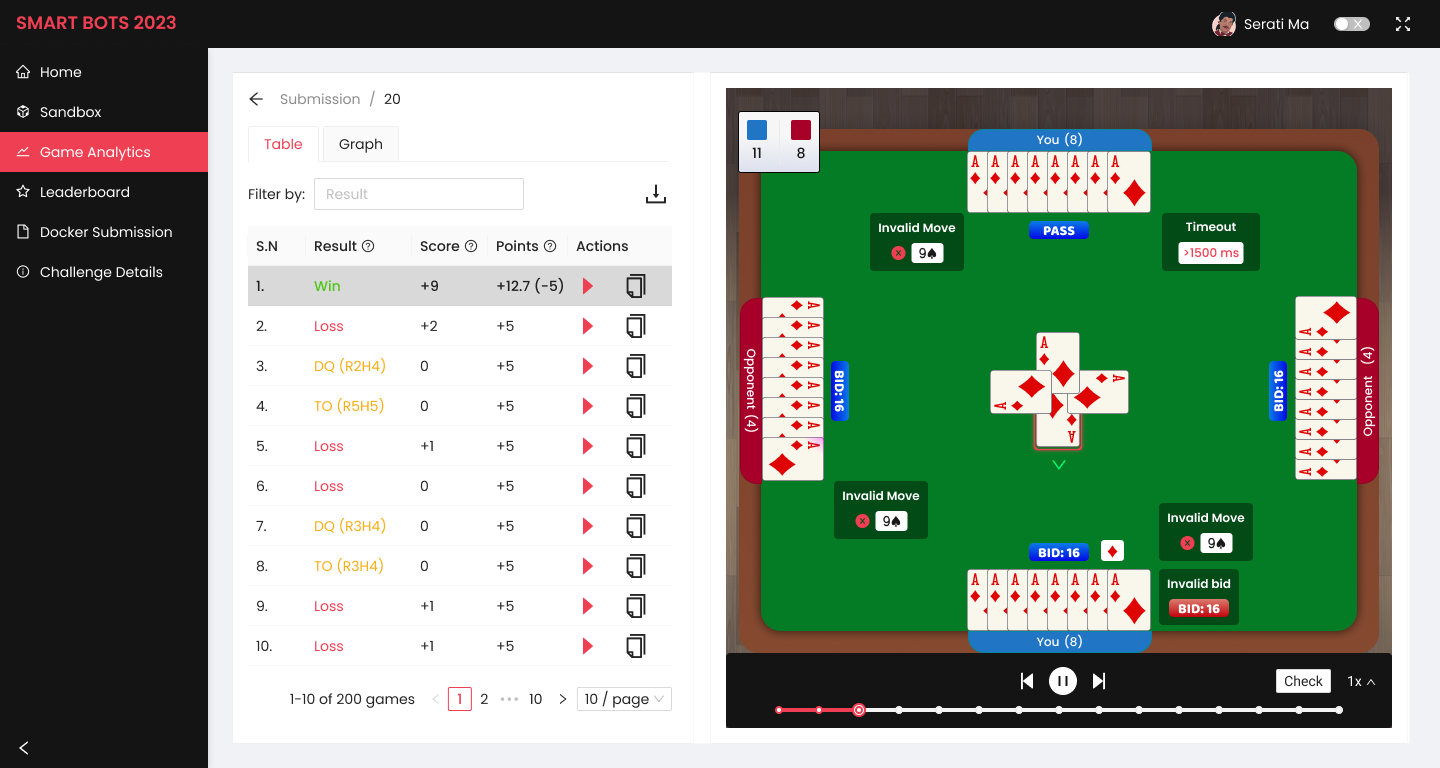

An intuitive analytics tab provides insights into bot performance across games, enabling participants to identify strengths and areas for improvement.

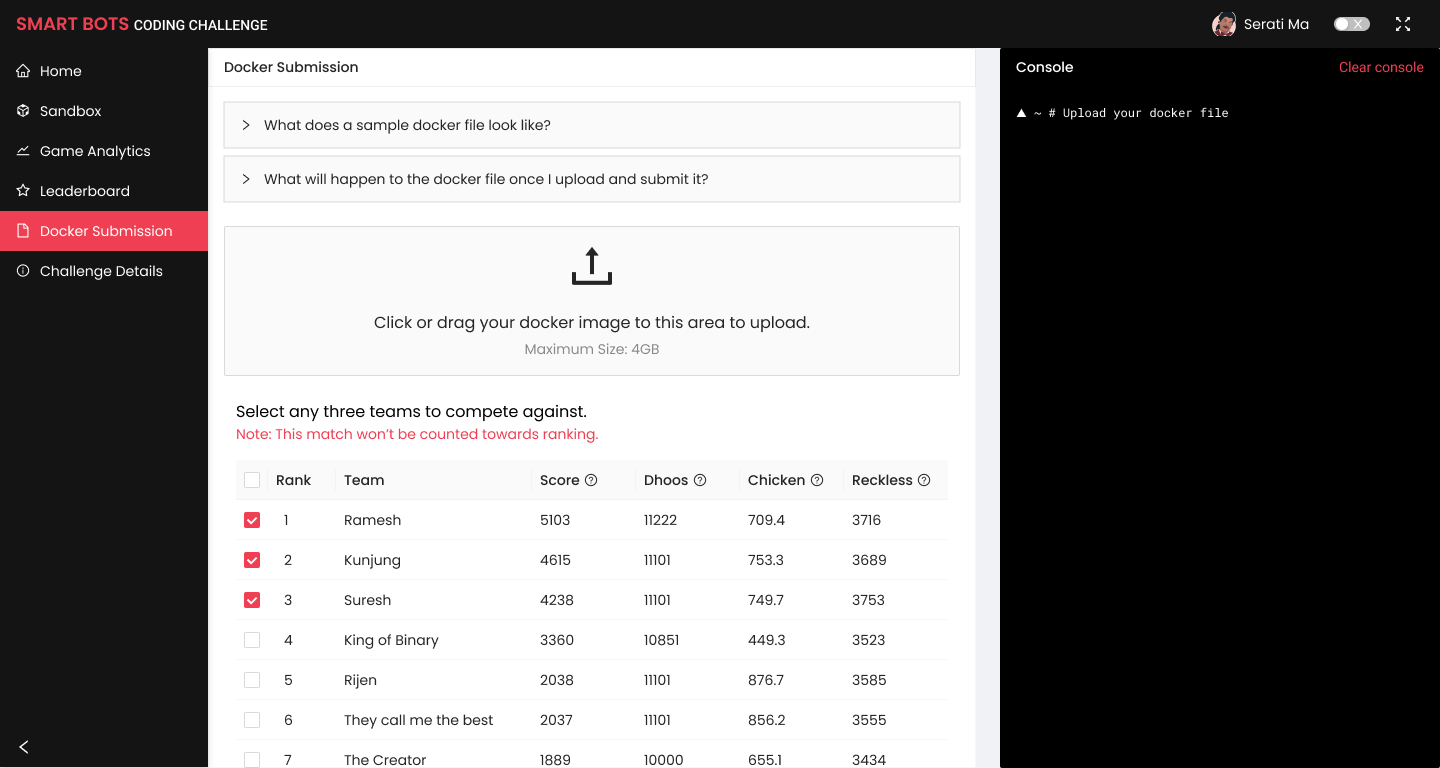

A Docker-based system ensures participants can submit and run their bot code safely on the server.

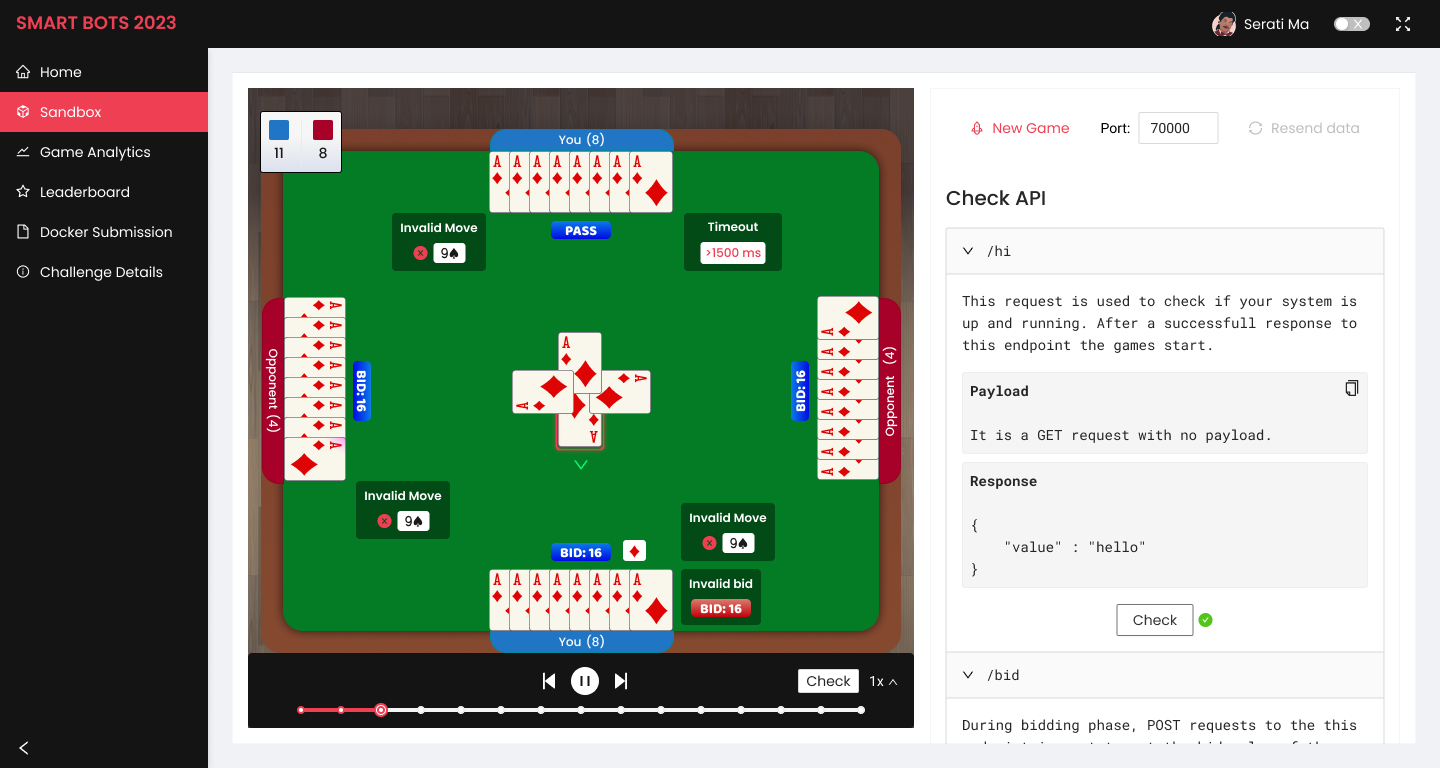

Sandbox enables participants to test and optimize their bots without impacting official rankings.

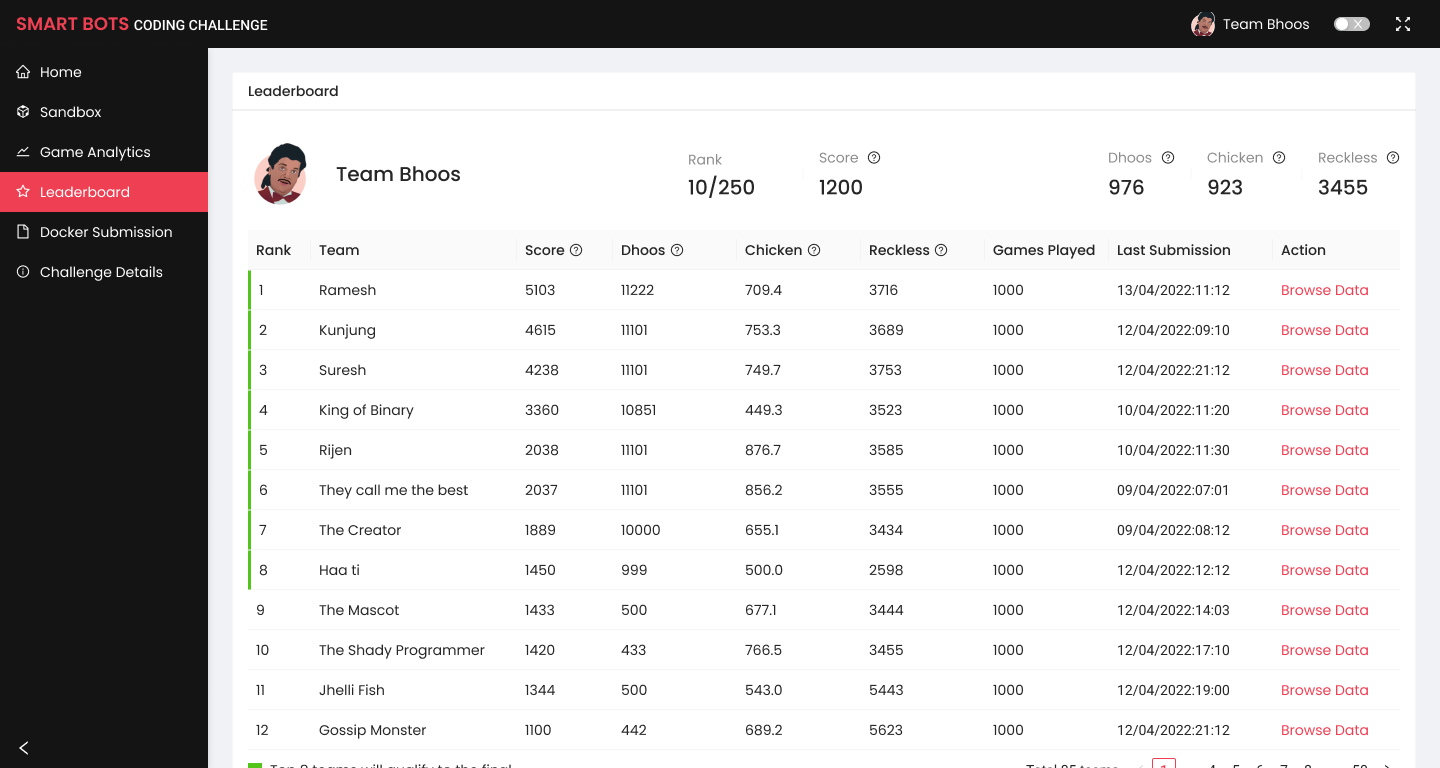

Leaderboard displays real-time rankings and detailed performance metrics to build competitive energy.

The dashboard played a crucial role in the success of the first SmartBots Coding Challenge. Although we did not collect analytics on dashboard usage, we observed consistent interaction from participants as they tracked their performance and rankings.

For the competition’s second year, the dashboard was reused with several refined features, resulting in significant engagement and participation: